I'm sure everyone has collected a good story or two over the years about a customer or provider.

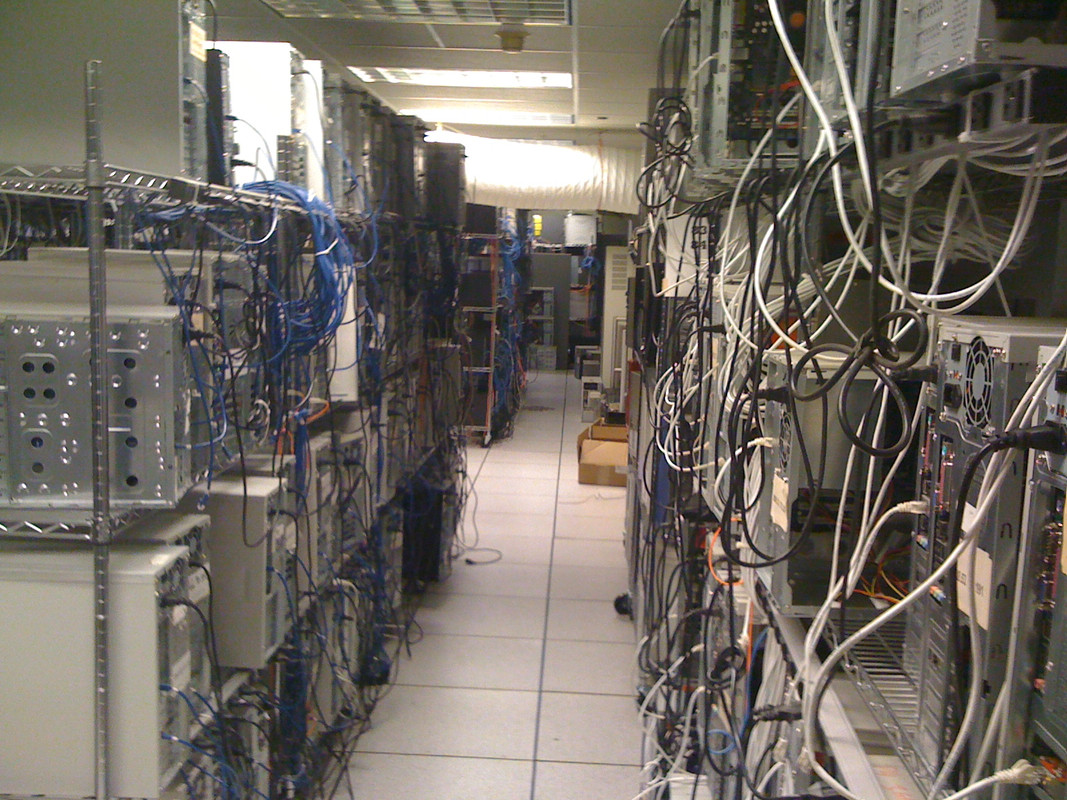

One of my favorite stories is about a time I toured a data center while looking for a colo solution. This was long before we had our own data centers. As we walked through the facility, I noticed a puddle of water on the raised floor, and when I asked about it I was told, verbatim, “Ah, that’s just water and nothing to worry about.” I was like, “Um, it actually is something to worry about, which is why I asked you about it.”

As we moved on, I noticed it was quite warm and there was no airflow coming out of the perforated raised-floor tiles. The answer I was given was…wait for it…“That particular tile is for the air return, which is why you don’t feel air coming out.” Me: “Wait, what? So you guys are able to push and pull air under the same raised floor? And shouldn’t the return be up high, where the hot air is? And even if you were somehow using the raised floor as a return, that doesn’t explain why the heck it’s so hot in here.”

So they basically tried to pass off the lack of air coming out of the floor, which was really caused by their ACs not working, as the raised floor being used for the return. I was flabbergasted. The blatant BS was so easy to disprove that it was frankly awkward. I got the heck out of there, and, long story short, the tour did not go well for anyone.