This discussion made me curious, so I tested my Centmin Mod LEMP stack with PHP-FPM Unix sockets on CentOS 7.8 64-bit with PHP 8.0.0beta3. Scaling is far better than I remember Unix sockets being, at least for hello.php tests. I managed to push to 60,000 concurrent users with wrk-cmm, using its bind source IP mode to work around port exhaustion:

wrk-cmm -b 127.0.0.1/27 -t1 -c60000 -d15s --latency --breakout http://localhost/hello.php; ss -s; netstat -l | grep php;

Running 15s test @ http://localhost/hello.php

1 threads and 60000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.66s 227.26ms 1.79s 88.34%

Connect 968.04ms 424.74ms 1.75s 58.10%

TTFB 1.66s 227.26ms 1.79s 88.34%

TTLB 2.44us 0.85us 35.00us 94.26%

Req/Sec 34.23k 2.78k 41.39k 91.67%

Latency Distribution

50% 1.74s

75% 1.76s

90% 1.78s

95% 1.78s

99% 1.78s

422717 requests in 15.10s, 134.24MB read

Requests/sec: 27994.36

Transfer/sec: 8.89MB

Total: 7122 (kernel 9319)

TCP: 107998 (estab 2, closed 102807, orphaned 1740, synrecv 0, timewait 102807/0), ports 0

Transport Total IP IPv6

* 9319 - -

RAW 0 0 0

UDP 2 1 1

TCP 5191 5184 7

INET 5193 5185 8

FRAG 0 0 0

unix 2 [ ACC ] STREAM LISTENING 34904346 /var/run/php-fpm/php-fpm.sock

php -v

PHP 8.0.0beta3 (cli) (built: Sep 1 2020 20:07:51) ( NTS )

Copyright (c) The PHP Group

Zend Engine v4.0.0-dev, Copyright (c) Zend Technologies

with Zend OPcache v8.0.0beta3, Copyright (c), by Zend Technologies
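As a rough illustration of why the `-b 127.0.0.1/27` bind mode in the wrk-cmm command above helps with port exhaustion: each connection to the same destination IP and port needs a unique source IP and port pair, so a single source IP tops out at the size of the ephemeral port range. The sketch below assumes the stock Linux range of 32768 to 60999 (my server's tuned value may differ, check with `sysctl net.ipv4.ip_local_port_range`) and assumes every address in the /27 is usable as a source IP.

```shell
# Assumption: stock Linux ephemeral port range; a tuned server may differ.
port_low=32768
port_high=60999
# Prefix length taken from the -b 127.0.0.1/27 argument.
prefix=27

# Ports available to one source IP for a single destination ip:port.
ports_per_ip=$(( port_high - port_low + 1 ))
# Number of addresses covered by the prefix.
source_ips=$(( 1 << (32 - prefix) ))
# Upper bound on simultaneous connections across all source IPs.
max_conns=$(( ports_per_ip * source_ips ))

echo "ports per source IP: $ports_per_ip"
echo "source IPs in /$prefix: $source_ips"
echo "max connections to one destination: $max_conns"
```

Under those assumptions a single source IP could not hold 60,000 connections to localhost:80, while 32 source IPs leave plenty of headroom.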

It looks like PHP 8.0.0beta3 scales much better than PHP 7.4.9. With PHP 8.0.0beta3, Unix sockets handled 60,000 concurrent hello.php requests without errors, and TCP on port 9000 handled 50,000 concurrent hello.php requests without errors:

wrk-cmm -b 127.0.0.1/27 -t1 -c50000 -d15s --latency --breakout http://localhost/hello.php; ss -s; netstat -plant | grep php;

Running 15s test @ http://localhost/hello.php

1 threads and 50000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 1.78s 244.26ms 1.99s 88.86%

Connect 815.33ms 357.13ms 1.45s 57.62%

TTFB 1.78s 244.26ms 1.99s 88.86%

TTLB 2.62us 1.11us 136.00us 94.82%

Req/Sec 26.60k 2.63k 28.18k 95.24%

Latency Distribution

50% 1.85s

75% 1.87s

90% 1.89s

95% 1.97s

99% 1.98s

338359 requests in 15.11s, 107.45MB read

Requests/sec: 22388.07

Transfer/sec: 7.11MB

Total: 3925 (kernel 5073)

TCP: 153200 (estab 3573, closed 101428, orphaned 4683, synrecv 0, timewait 101427/0), ports 0

Transport Total IP IPv6

* 5073 - -

RAW 0 0 0

UDP 4 3 1

TCP 51772 51766 6

INET 51776 51769 7

FRAG 0 0 0

tcp 41715 0 127.0.0.1:9000 0.0.0.0:* LISTEN 15096/php-fpm: mast

PHP 8 is damn fine!

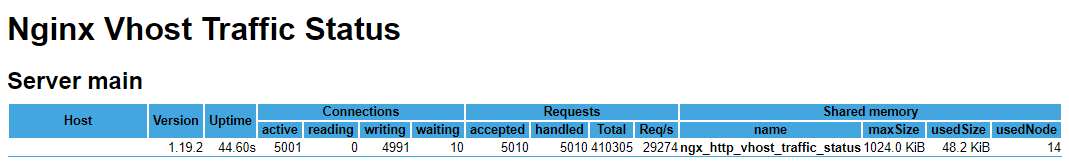

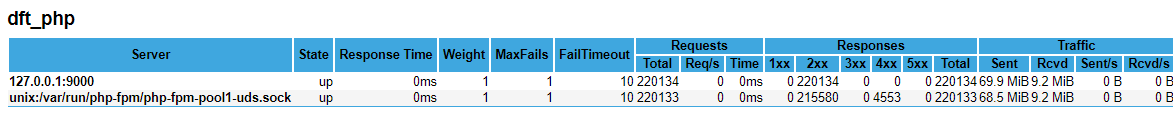

edit: I tested TCP vs Unix sockets on PHP 8.0.0beta3 again at 5,000 concurrent users. wrk-cmm doesn't report any errors, but with Nginx vhost stats enabled to monitor my upstreams, I see Nginx recording 4xx errors for the Unix socket upstream, which according to the logs are 499 errors (Nginx's non-standard code for a client closing the connection before the response was sent). PHP-FPM over TCP ran without errors.
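If you want to check your own logs for the same thing, here is a minimal sketch for tallying responses by status code. `count_status` is a hypothetical helper name, and it assumes Nginx's default `combined` log format, where the status code is the 9th whitespace-separated field; a custom log format would need a different field number.

```shell
# Hypothetical helper: count responses per HTTP status code in an access log.
# Assumes the default "combined" log format (status is field 9).
count_status() {
  awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' "$1" | sort
}
```

Run it as `count_status /path/to/access.log` (the path is a placeholder) after each benchmark and compare the 499 line between the Unix socket and TCP runs.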

edit: For completeness, I tested with the latest hey too. For once, Unix sockets are showing good performance and scalability compared to TCP for PHP-FPM. My previous tests never showed Unix sockets scaling this well with concurrent users!

hey non-HTTPS benchmarks with 5,000 concurrent users, 100k requests, and 1 CPU core

PHP-FPM Unix sockets ~1.39% faster than TCP by requests/sec (6,229 vs 6,143)

TCP

hey -cpus 1 -n 100000 -c 5000 http://localhost/hello.php

Summary:

Total: 16.2777 secs

Slowest: 3.1469 secs

Fastest: 0.0011 secs

Average: 0.7833 secs

Requests/sec: 6143.3903

Response time histogram:

0.001 [1] |

0.316 [635] |

0.630 [3924] |■■

0.945 [91507] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

1.259 [39] |

1.574 [2474] |■

1.889 [1204] |■

2.203 [60] |

2.518 [153] |

2.832 [0] |

3.147 [3] |

Latency distribution:

10% in 0.6540 secs

25% in 0.7340 secs

50% in 0.7829 secs

75% in 0.7999 secs

90% in 0.8169 secs

95% in 0.8663 secs

99% in 1.5877 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0211 secs, 0.0011 secs, 3.1469 secs

DNS-lookup: 0.0000 secs, 0.0000 secs, 0.0235 secs

req write: 0.0106 secs, 0.0000 secs, 1.2172 secs

resp wait: 0.7457 secs, 0.0010 secs, 1.6507 secs

resp read: 0.0030 secs, 0.0000 secs, 0.8266 secs

Status code distribution:

[200] 100000 responses

Unix Sockets

hey -cpus 1 -n 100000 -c 5000 http://localhost/hello.php

Summary:

Total: 16.0537 secs

Slowest: 2.9224 secs

Fastest: 0.0008 secs

Average: 0.7718 secs

Requests/sec: 6229.0776

Response time histogram:

0.001 [1] |

0.293 [476] |

0.585 [2522] |■

0.877 [92117] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

1.169 [888] |

1.462 [1624] |■

1.754 [2181] |■

2.046 [44] |

2.338 [108] |

2.630 [38] |

2.922 [1] |

Latency distribution:

10% in 0.6372 secs

25% in 0.7155 secs

50% in 0.7736 secs

75% in 0.7853 secs

90% in 0.8008 secs

95% in 0.8673 secs

99% in 1.5896 secs

Details (average, fastest, slowest):

DNS+dialup: 0.0204 secs, 0.0008 secs, 2.9224 secs

DNS-lookup: 0.0000 secs, 0.0000 secs, 0.5269 secs

req write: 0.0110 secs, 0.0000 secs, 1.2326 secs

resp wait: 0.7339 secs, 0.0007 secs, 1.8985 secs

resp read: 0.0030 secs, 0.0000 secs, 0.8072 secs

Status code distribution:

[200] 100000 responses

So there's really not much between them, provided whichever setup you use, TCP or Unix socket, is optimally configured.
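For reference, the ~1.39% figure comes straight from the two hey Requests/sec values above. A quick sketch of the arithmetic (`pct_faster` is just an illustrative helper name):

```shell
# Percentage by which the second requests/sec value exceeds the first.
pct_faster() {
  awk -v base="$1" -v other="$2" 'BEGIN { printf "%.2f%%\n", (other - base) / base * 100 }'
}

# TCP: 6143.3903 req/s, Unix sockets: 6229.0776 req/s (from the hey runs above)
pct_faster 6143.3903 6229.0776
```

A gap that small from a single run of each is within what I'd expect from run-to-run variation, so treat it as roughly equal rather than a clear win.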