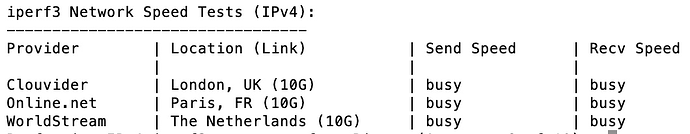

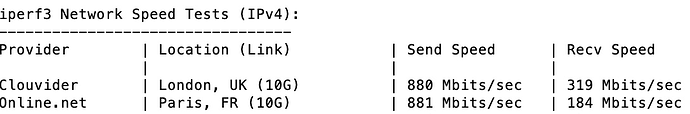

I noticed the busy Clouvider servers as well. maybe we should tag Dom @Clouvider to make him aware. maybe he blocked certain IP ranges in general, or maybe it’s just a lot of testing going on with all the people who got a new toy during BF and the holidays…

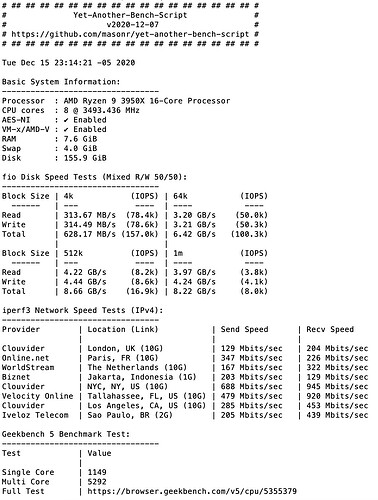

for IOps and the fio tests:

to determine the speed and performance of a disk you need to consider two main things: the highest throughput/bandwidth you can achieve with a constant data stream, measured in MB/s or GB/s, and the largest number of operations per second possible, literally IOps…

both may be limited by different things. for bandwidth that is usually the bus/port/protocol, aka SATA 1/2/3 vs PCIe NVMe and such, or, if you have network storage attached, the transfer rate of that connection.

for IOps this mainly depends on the controller inside your SSD/NVMe and its generation. hard disks fall behind heavily in that regard because of the time head positioning takes, so mechanical limits etc.

with all that said, you might now have another look at the fio numbers. in general you can roughly assume blocksize * IOps = bandwidth. like in your example, 4k blocksize * 1.7k IOps = ~6.9MB/s or 1M blocksize * 291 IOps = ~291MB/s
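to make that rule of thumb concrete, here is a minimal sketch using the two numbers from your fio output. the function name and constants are made up for illustration, and the result is only as exact as the rounded IOps values that go in:

```python
KIB = 1024
MIB = 1024 * KIB

def bandwidth_bytes_per_s(block_size_bytes: int, iops: float) -> float:
    """Rule of thumb: bandwidth = block size * operations per second."""
    return block_size_bytes * iops

# 4k block size at ~1.7k IOps
small = bandwidth_bytes_per_s(4 * KIB, 1700)
# 1M block size at 291 IOps
large = bandwidth_bytes_per_s(1 * MIB, 291)

print(f"4k: {small / 1e6:.2f} MB/s")
print(f"1M: {large / MIB:.0f} MiB/s")
```

note that the 4k figure only lands at ~6.9 when you count in decimal megabytes, while the 1M figure is 291 when counted in MiB, so keep an eye on which unit a tool reports.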

so, to measure the aforementioned highest possible bandwidth you want to use a large blocksize like 1MB, as even a small number of IOs should then be enough to reach the limit of the transfer rate. vice versa, to measure the best possible IOps result you need a small blocksize like 4k, so you can issue as many operations as possible without being limited by the bandwidth…
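a rough way to picture this is a storage device with just two hard ceilings, one for IOps and one for bandwidth. that is a simplification (real devices and caches behave less cleanly), and the two ceilings below are simply borrowed from the fio numbers in this thread as an assumption:

```python
KIB = 1024
MIB = 1024 * KIB

def achievable(block_size_bytes, iops_limit, bandwidth_limit_bytes):
    """Return (IOps, limiting factor) for a toy device with two ceilings."""
    iops_by_bandwidth = bandwidth_limit_bytes / block_size_bytes
    if iops_by_bandwidth < iops_limit:
        return iops_by_bandwidth, "bandwidth"
    return float(iops_limit), "iops"

# assumed ceilings: 1700 IOps and 291 MiB/s
results = {}
for bs in (4 * KIB, 64 * KIB, 512 * KIB, 1 * MIB):
    iops, bound = achievable(bs, 1700, 291 * MIB)
    results[bs] = (iops, bound)
    print(f"{bs // KIB:>5}k: {iops:7.0f} IOps, "
          f"{iops * bs / MIB:6.1f} MiB/s, limited by {bound}")
```

in this toy model the small blocksizes run into the IOps ceiling long before the bandwidth matters, and the large ones hit the bandwidth ceiling with only a few hundred operations.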

now keep in mind that there are things like file caches, RAID caches, the ZFS ARC and so on, so be careful when interpreting the numbers you are seeing…

it says 7.2 TiB Disk, so this is for sure HDD. a common HDD is capable of something in the range of 100-180 MB/s transfer-wise and also 100-180 IOps max.

based on the max 291 MB/s in the 1M section for combined read/write, I’d guess you are running some RAID, which usually helps. and based on the 1.7k IOps in the 4k section, I’d think it is cached somehow, either via a hardware RAID buffer or maybe you use ZFS, which does some good caching on these jobs?
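the reasoning behind that guess is just a comparison against the upper end of the single-HDD range mentioned above. a minimal sketch (the helper name is made up, and the 180 figures are my rough ballpark, not a spec):

```python
# rough upper end of what one common HDD can do (ballpark assumption)
HDD_MAX_MB_S = 180
HDD_MAX_IOPS = 180

def times_single_hdd(measured: float, single_disk_max: float) -> float:
    """How many times the best-case single HDD the measured value is."""
    return measured / single_disk_max

bw_factor = times_single_hdd(291, HDD_MAX_MB_S)     # 1M test, MB/s
iops_factor = times_single_hdd(1700, HDD_MAX_IOPS)  # 4k test, IOps

print(f"bandwidth: {bw_factor:.1f}x a single HDD")
print(f"IOps:      {iops_factor:.1f}x a single HDD")
```

the bandwidth factor is small enough to be explained by a few disks in a RAID, while the IOps factor is far too large for spinning disks alone, which is why some kind of cache seems likely.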

in general, higher IOps are usually more beneficial for common hosting workloads, as most accesses go to rather small files or parts of them, instead of reading/writing large files all the time. SSDs and nowadays NVMe are therefore especially important for providers, because they a) can be used for strong marketing and b) allow a higher density of customers on the same node, because you won’t hit the IO limits that easily.

the 64k and 512k numbers btw are more or less additional data points, to see whether the whole thing scales fairly linearly or runs into one limit or the other early, which on a VPS could be an artificial one…

sorry for the wall of text, but one last point about AWS and Azure and the IOps of their different storage types: if you now think about the relation between IOps and bandwidth, it also becomes clear why artificial limits, especially on IOps, make sense. at large scale one can calculate quite well how many workloads, even different ones, you can put on the same storage array so that they average out across the system and you can still guarantee a certain level of resources.
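a very rough sketch of that kind of calculation, with completely made-up numbers (the array budget, the per-volume cap and the average utilisation are all hypothetical, not anything AWS or Azure publish):

```python
def guaranteed_volumes(array_iops: int, cap_per_volume: int) -> int:
    """Volumes that can all hit their cap at the same time."""
    return array_iops // cap_per_volume

def averaged_volumes(array_iops: int, cap_per_volume: int,
                     avg_utilisation: float) -> int:
    """Volumes that fit if each one only uses a fraction of its cap on average."""
    return int(array_iops / (cap_per_volume * avg_utilisation))

# hypothetical array: 100k IOps total, each volume capped at 500 IOps
strict = guaranteed_volumes(100_000, 500)
# hypothetical assumption: volumes use 25% of their cap on average
relaxed = averaged_volumes(100_000, 500, 0.25)

print(f"fully guaranteed: {strict} volumes")
print(f"relying on averaging: {relaxed} volumes")
```

without a cap per volume neither number could be calculated at all, since a single noisy workload could eat the whole budget.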

hope this helps to get your mind spinning and thinking about it. also please keep in mind that this write-up is in my own words and understanding, and might not be fully complete or absolutely correct. everyone feel free to jump in and add info or correct me if I missed something